Why This Matters Now

AI is transforming every industry—but the risks are escalating just as fast. In 2025, Gartner predicts that 70% of enterprises will face formal AI governance requirements.

The question is no longer:

“Do we need AI governance?”

But rather:

“How fast can we prove it?”

Regulations and frameworks, from the EU AI Act to the voluntary NIST AI RMF, are turning Responsible AI best practices into requirements that regulators and buyers expect you to evidence. Falling behind risks more than non-compliance: it means lost deals, reputational damage, and costly remediation.

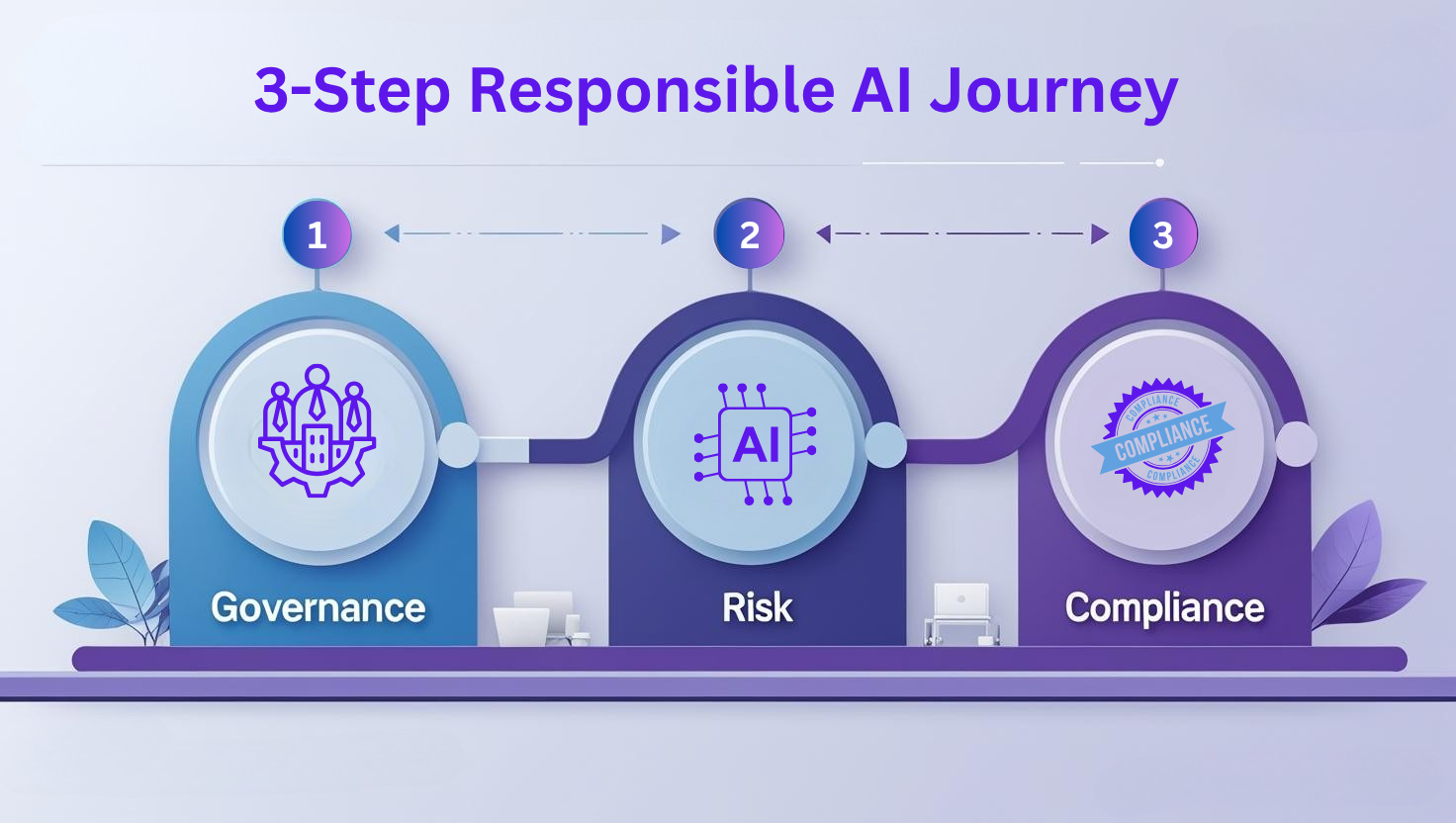

CognitiveView makes the path forward practical. Our 3-step roadmap—Governance, Risk, Compliance (GRC)—gives any organization a clear, standards-aligned way to assess, monitor, and scale Responsible AI:

- Across industries: finance, healthcare, HR, public sector, cybersecurity

- At any maturity stage: from GenAI experimentation to full-scale deployment

- With evidence built in—not just intent

Step 1 – Governance: Assess and Publish Your AI TrustCenter

Goal: Gain instant visibility into your AI posture—and communicate it with confidence.

What Happens:

- Complete a self-guided assessment mapped to frameworks like the NIST AI RMF and the EU AI Act, plus sector-specific principles in privacy, healthcare, and financial services.

- Instantly surface gaps across transparency, bias, privacy, and accountability.

- Auto-generate a branded AI TrustCenter—a public or private page that summarizes your Responsible AI stance, ready for sharing with stakeholders.

Who Cares & Why:

- CEO / Chief Compliance Officer: Strengthens trust narrative and accelerates strategic deals.

- Procurement Teams & Buyers: One link answers Responsible AI due-diligence questions, with no back-and-forth emails.

Real‑World Example:

A SaaS startup reduced procurement cycles from 8 weeks to just 1 week by embedding their AI TrustCenter in sales decks—instantly answering client compliance and governance questions.

Key Outcomes:

✅ A clear, defensible governance story—aligned to international standards

✅ Up to 40% reduction in procurement friction (internal benchmark)

✅ Low lift—fully self-service, zero engineering required

Step 2 – Risk: Implement Observability & Model Monitoring

Goal: Proactively detect and mitigate risks across GenAI, agent-based, and predictive models.

What Happens:

- Use Auto-Discovery to connect with standard MLOps platforms like Azure ML, SageMaker, or MLflow—instantly surfacing registered models and pipelines.

- For custom agents, GenAI systems, or predictive models, run TRACE by uploading evaluation metrics as a CSV file or submitting them via API.

- Continuously monitor accuracy, drift, fairness, hallucination rates, and more.

- Trigger risk alerts and dashboards for both data science and compliance teams.
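
To make the monitoring step more concrete, here is a minimal sketch of how a team might compute two of the signals named above, drift and fairness, and package them as CSV. It is an illustration under stated assumptions, not TRACE's actual method: the Population Stability Index (PSI) is one common drift measure, the demographic parity gap is one common fairness measure, and the CSV column names (`model_id`, `metric`, `value`) are hypothetical rather than a documented upload schema.

```python
import csv
import io
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two samples of scores in [0, 1], using equal-width bins."""
    eps = 1e-6  # floor for empty bins so the logarithm stays defined

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(int(v * bins), bins - 1)  # put v == 1.0 in the last bin
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def demographic_parity_gap(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups.

    predictions are 0/1 labels; groups are the matching group names.
    """
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def metrics_to_csv(model_id, metrics):
    """Serialize metrics as CSV text. Column names are illustrative only."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["model_id", "metric", "value"])
    for name, value in metrics.items():
        writer.writerow([model_id, name, f"{value:.4f}"])
    return buffer.getvalue()
```

A PSI of zero means the two score distributions fall into the bins identically; larger values indicate more drift. Alerting thresholds are a judgment call and should be set per model rather than copied from a sketch like this.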

Who Cares & Why:

- Head of Data Science: Prevent fire drills, iterate faster, and validate new architectures (LLMs, agents, etc.)

- Chief Risk Officer: Real-time assurance across all AI types—not just those tied to legacy MLOps pipelines

Real‑World Example:

A regional bank used Auto-Discovery to connect MLflow and spot drift in a credit risk model. For a new GenAI agent, TRACE detected hallucination spikes in summarised financial advice, helping the bank catch the issue before it caused reputational damage.

Key Outcomes:

✅ Unified observability for both traditional ML and GenAI/agentic models

✅ Audit-ready risk signals across fairness, bias, drift, and hallucinations

✅ Easy integration—connect via platform, or run TRACE via API/CSV

✅ Moderate effort: light integration work for enterprise-grade coverage

Step 3 – Compliance: Align With NIST, EU AI Act, ISO 42001

Goal: Prove Responsible AI compliance—backed by audit-ready evidence.

What Happens:

- Map each control to globally recognized frameworks like NIST AI RMF, EU AI Act, ISO 42001, and Singapore’s AI Verify.

- Automate evidence collection, control testing, and gap analysis—all tied to real model performance.

- Export regulator-facing reports and board summaries in one click using TRACE’s compliance engine.
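
The control mapping and gap analysis described above can be pictured as a simple data structure. The sketch below is an assumption-laden illustration, not CognitiveView's data model: the `Control` class, the control IDs, and the evidence file name are all hypothetical, and the framework reference labels are examples that should be checked against the official texts before use.

```python
from dataclasses import dataclass, field


@dataclass
class Control:
    control_id: str
    description: str
    frameworks: dict  # framework name -> reference label (illustrative)
    evidence: list = field(default_factory=list)  # names of collected artifacts


def gap_report(controls):
    """Group the IDs of controls that have no evidence by mapped framework."""
    gaps = {}
    for control in controls:
        if control.evidence:
            continue  # at least one artifact is attached, so not a gap
        for framework in control.frameworks:
            gaps.setdefault(framework, []).append(control.control_id)
    return gaps


# Hypothetical controls for illustration only.
controls = [
    Control(
        "C-01",
        "Bias testing on training data",
        {"NIST AI RMF": "MEASURE", "EU AI Act": "Article 10"},
        evidence=["fairness_report.csv"],
    ),
    Control(
        "C-02",
        "Human review of high-impact outputs",
        {"NIST AI RMF": "MANAGE", "EU AI Act": "Article 14"},
    ),
    Control(
        "C-03",
        "AI policy approved by leadership",
        {"NIST AI RMF": "GOVERN", "ISO 42001": "(clause to confirm)"},
    ),
]

report = gap_report(controls)
```

Here `report` lists C-02 and C-03 as gaps under NIST AI RMF, C-02 under the EU AI Act, and C-03 under ISO 42001. Mapping one control to several frameworks is what lets a single piece of evidence close gaps in more than one place.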

Who Cares & Why:

- Chief Compliance Officer & Governance Teams: Slash audit prep time, reduce manual compliance overhead.

- Regulators & Clients: Gain confidence from verifiable artefacts, not just policy PDFs.

Real‑World Example:

A healthcare provider used CognitiveView to validate compliance across its diagnostic AI models. TRACE linked model metrics to EU AI Act risk categories, cutting external audit time by 60% and boosting internal stakeholder trust.

Key Outcomes:

✅ Demonstrable compliance with global Responsible AI frameworks

✅ Lower legal and regulatory risk exposure

✅ Strong external signal to customers, auditors, and investors

✅ Higher effort—includes guided workshops, deep control mapping, and multi-role collaboration

Start Free. Scale With Confidence.

Subscriptions Aligned to Your Responsible AI Journey

We offer a free TRACE health check to help you quickly assess fairness, drift, and compliance risks—no integrations required.

From there, our plans follow the Governance → Risk → Compliance maturity model, allowing you to scale your Responsible AI practices at your own pace:

🟢 Starter — Launch Governance

For teams just starting their Responsible AI journey

- Self-service assessment

- Single branded AI TrustCenter

- Baseline mapping to NIST, EU AI Act, and sectoral principles (e.g., healthcare, privacy)

✅ Ideal for earning early credibility with customers, investors, and internal stakeholders

🟡 Growth — Scale Risk Oversight

For teams building out model monitoring and cross-functional collaboration

- Everything in Starter

- Model observability dashboards (powered by TRACE)

- Multi-domain TrustCenters and audit collaboration workflows

✅ Built for data-science, risk, and compliance teams to stay in sync

🔵 Enterprise — Assure Compliance

For teams needing full-scale audit readiness and regulatory alignment

- Everything in Growth

- Continuous control monitoring

- Vendor discovery and model inventory

- Private/hybrid deployments

- Audit-ready evidence aligned with NIST, EU AI Act, ISO 42001, and AI Verify

✅ The choice for regulated industries and enterprise-grade compliance teams

Our Vision: Responsible AI for Everyone

We believe every organization—no matter the size or industry—deserves the ability to deploy AI responsibly without facing prohibitive costs or complexity.

That’s why we’ve designed a journey that starts simple and grows with you.

By breaking Responsible AI into small, self-service steps, you get quick wins that build into long-term trust and compliance.

Need help along the way? Our experts are ready when you're ready—with guided assessments, deep audits, and tailored support.

Start where you are.

Grow at your pace.

Build AI that earns trust.

Open‑Source Evaluation & Best Practices

At CognitiveView, we embrace the speed of AI innovation—without locking you into black-box solutions.

We fully support open-source evaluation frameworks such as DeepEval for LLMs and leading agent-evaluation toolkits, enabling your ML team to move fast and stay compliant.

What We Provide:

- ⚙️ Pre-built integrations & sample code to plug directly into your existing CI/CD or MLOps pipelines

- 📚 Curated best-practice playbooks covering data prep, metric selection, and evaluation setup

- 🧭 Framework-agnostic guidance, so you don’t get locked into tools that may not scale with your use case

- 🔍 Transparent, reproducible assessments—your engineers stay in control, using trusted tools from the open-source community
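
As a rough idea of what plugging evaluation into a CI/CD pipeline can look like, the sketch below gates a build on evaluation metrics. It is framework-agnostic by design and does not reproduce any CognitiveView integration or DeepEval API: the metric names, the thresholds, and the assumption that an earlier pipeline step has already produced a metrics dictionary are all hypothetical.

```python
# Hypothetical thresholds: "min" means the value must be at least the limit,
# "max" means the value must not exceed it.
THRESHOLDS = {
    "answer_relevancy": ("min", 0.80),
    "hallucination_rate": ("max", 0.05),
}


def check_metrics(metrics, thresholds=THRESHOLDS):
    """Return a list of human-readable failures; empty means the gate passes."""
    failures = []
    for name, (kind, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing from evaluation output")
        elif kind == "min" and value < limit:
            failures.append(f"{name}: {value} is below the minimum {limit}")
        elif kind == "max" and value > limit:
            failures.append(f"{name}: {value} is above the maximum {limit}")
    return failures


def exit_code(metrics):
    """Print any failures and return a process exit code for the CI job."""
    failures = check_metrics(metrics)
    for failure in failures:
        print("FAIL", failure)
    return 1 if failures else 0
```

In a pipeline, the final step would pass the evaluation output to `exit_code` and hand the result to `sys.exit`, so a regression fails the build. Treating a missing metric as a failure is a deliberate choice here: a silent gap in evaluation should block a release just as a bad score would.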

Key Takeaways

✅ Follow the sequence: Governance → Risk → Compliance. Skipping steps creates blind spots.

✅ Data-driven oversight: Real-time metrics beat once-a-year audits.

✅ Regulatory alignment: NIST, EU AI Act, ISO 42001 baked in from the start.

✅ Scalable pricing: Start small, expand confidently.

Ready to Take the Next Step?

Start your Responsible AI journey today—without the guesswork.

🚀 Try the free TRACE health check

🧪 Explore our interactive demo

📊 Compare plans that grow with you

📅 Book a 30‑minute consultation with our team

Responsible AI isn’t optional anymore—

It’s your competitive edge.