AI and Privacy: A Growing Concern

AI is transforming industries, from personalized healthcare to real-time fraud detection. But as AI systems process vast amounts of data, concerns over privacy, security, and regulatory compliance have surged. How can businesses harness the power of AI while ensuring they protect user data and comply with evolving regulations?

With the rise of the EU AI Act, GDPR, and NIST AI RMF, organizations must rethink how AI interacts with sensitive information. Failing to address AI privacy risks can result in reputational damage, regulatory fines, and loss of consumer trust.

The Key AI Privacy Challenges

1. Data Collection & Consent Issues

AI systems rely on large datasets to train models. However, organizations often face challenges in obtaining clear user consent for data usage. Many users are unaware of how their data is collected and processed by AI models.

🔹 Example: Facial recognition AI in retail stores has sparked concerns as customers are often unaware their biometric data is being collected.

2. Data Anonymization & Re-Identification Risks

While anonymization techniques like differential privacy and data masking help protect user identity, AI models can still infer personal details by analyzing patterns in anonymized data.

🔹 Example: A 2019 MIT study found that AI could re-identify individuals from anonymized health records with up to 95% accuracy by cross-referencing datasets.
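
One rough way to gauge this risk before sharing a dataset is to measure its k-anonymity: the size of the smallest group of records that share the same combination of quasi-identifiers (attributes that are harmless alone but identifying together). The sketch below is illustrative only; the field names and sample records are hypothetical.

```python
from collections import Counter

def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group of records that share the
    same values for every quasi-identifier (the dataset's k)."""
    if not records:
        return 0
    groups = Counter(
        tuple(record[q] for q in quasi_identifiers) for record in records
    )
    return min(groups.values())

# Hypothetical "anonymized" health records: names are removed, but ZIP code,
# birth year, and sex remain and could be matched against a public dataset.
records = [
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1980, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1975, "sex": "M", "diagnosis": "flu"},
]

k = k_anonymity(records, ["zip", "birth_year", "sex"])
print(k)  # 1: at least one person is unique on these three attributes
```

A result of k = 1 means at least one record can be singled out by anyone who knows those three attributes, even though no name is stored.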

3. AI Model Transparency & Explainability

Many AI systems operate as black boxes, making it difficult to explain how decisions are made. This lack of transparency raises concerns in areas like finance and healthcare, where AI-driven decisions impact individuals directly.

🔹 Example: AI-based credit scoring systems have faced scrutiny for discriminatory lending decisions, leaving applicants without a clear understanding of why they were denied loans.

4. Data Security & Cyber Threats

AI models require continuous access to sensitive data, making them prime targets for cyberattacks. Model inversion attacks and adversarial attacks can expose confidential information or manipulate AI predictions.

🔹 Example: In 2022, researchers demonstrated how AI models trained on medical data could be reverse-engineered to reveal patients' personal health information.

5. Compliance with Global AI & Data Protection Regulations

With AI-specific regulations emerging, organizations must ensure their AI systems align with laws, frameworks, and standards such as:

✅ GDPR (General Data Protection Regulation) – EU

✅ CCPA (California Consumer Privacy Act) – US

✅ EU AI Act – AI Risk Categorization & Compliance

✅ NIST AI Risk Management Framework – US AI Security & Ethics

✅ ISO 42001 – AI Management System Standard

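Violating the binding laws on this list (GDPR, CCPA, and the EU AI Act) can lead to hefty fines and operational restrictions. The NIST AI RMF and ISO 42001 are voluntary, but they offer a structured way to show that AI risks are being managed.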

Best Practices for Ensuring AI Privacy & Data Protection

1. Implement Privacy-Preserving AI Techniques

To minimize privacy risks, organizations should adopt advanced privacy-enhancing technologies:

- Federated Learning – Allows AI models to be trained across decentralized devices without centralizing sensitive data.

- Differential Privacy – Adds calibrated statistical noise to query results or model updates so that the output reveals very little about whether any single individual's data was included.

- Synthetic Data – Generates artificial data that mimics real-world datasets without exposing personal information.
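
As an illustration of the differential privacy idea, here is a minimal sketch of the Laplace mechanism applied to a counting query. It assumes a simple setting in which adding or removing one person changes the count by at most 1; the function name `private_count`, the sample ages, and the chosen epsilon are hypothetical, and a production system would normally rely on a vetted library rather than hand-rolled noise.

```python
import random

def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the items matching `predicate`.

    A counting query has sensitivity 1, so Laplace noise with
    scale 1/epsilon is the standard calibration for epsilon-DP.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon
    # The difference of two independent Exp(1) draws is Laplace(0, 1).
    noise = scale * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return true_count + noise

# Hypothetical patient ages; the analyst only ever sees the noisy answer.
ages = [34, 71, 66, 45, 80, 29]
noisy = private_count(ages, lambda age: age >= 65, epsilon=0.5)
print(round(noisy, 2))
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon gives more accurate answers at the cost of weaker guarantees.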

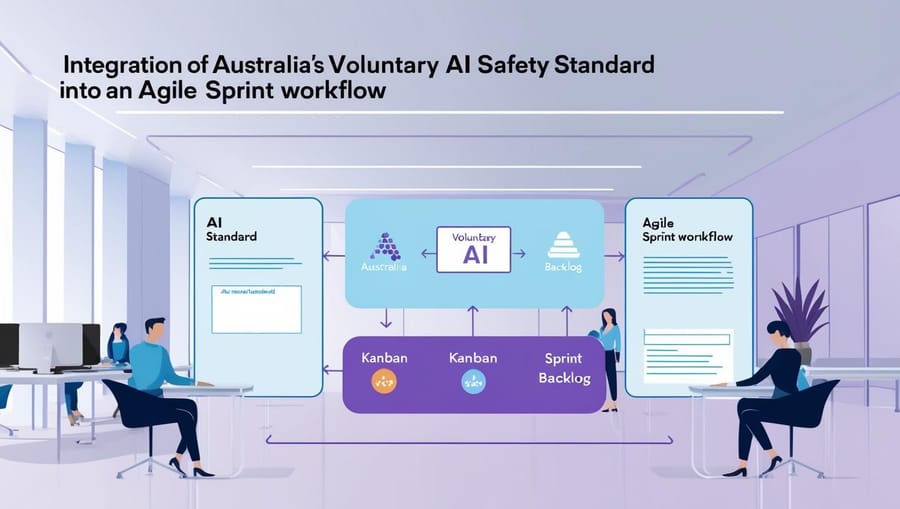

2. Strengthen AI Governance & Compliance

- Establish AI governance frameworks aligned with NIST AI RMF & ISO 42001.

- Conduct AI privacy impact assessments (PIAs) to evaluate risks before deployment.

- Maintain detailed documentation on AI decision-making processes to ensure transparency.

3. Enhance Data Security in AI Systems

- Encrypt sensitive data used in AI training to prevent unauthorized access.

- Use access controls and zero-trust security models to protect AI infrastructure.

- Monitor for adversarial attacks that attempt to manipulate AI outcomes.

4. Ensure Transparency & Explainability

- Use explainable AI (XAI) techniques to make AI-driven decisions understandable.

- Provide users with clear opt-in and opt-out mechanisms for data usage.

- Adopt AI fairness audits to detect bias and unintended privacy risks.
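
For simple models, an explanation can be as direct as showing how much each input pushed the score up or down. The sketch below assumes a hypothetical linear credit-scoring model; the feature names, weights, and applicant values are invented for illustration. Black-box models generally need dedicated attribution methods (SHAP and LIME are common choices), which this example does not implement.

```python
def explain_score(weights, bias, applicant):
    """Return the model score and per-feature contributions,
    sorted from most negative to most positive."""
    contributions = {
        name: weights[name] * applicant[name] for name in weights
    }
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return score, ranked

# Hypothetical model and applicant (not taken from any real scoring system).
weights = {"income_k": 2, "missed_payments": -15, "years_employed": 3}
bias = 10
applicant = {"income_k": 40, "missed_payments": 4, "years_employed": 2}

score, ranked = explain_score(weights, bias, applicant)
print(score)      # 36
print(ranked[0])  # ('missed_payments', -60): the main factor lowering the score
```

The most negative contributions can be surfaced to the applicant as plain-language reasons, which addresses the "why was I denied?" question directly.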

Industry Use Cases: AI Privacy in Action

🏥 Healthcare: Protecting Patient Data with Federated Learning

AI models trained on patient data must comply with HIPAA & GDPR where those laws apply. Federated learning is being used to train models on medical records across hospitals without the raw records leaving each site.
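
To make the federated learning idea concrete, here is a minimal sketch of federated averaging on a toy one-parameter model (y ≈ w · x). Each hypothetical hospital trains on its own data and shares only the resulting parameter; the server combines parameters weighted by dataset size. The data, learning rate, and round counts are assumptions chosen for illustration.

```python
def local_train(w, samples, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data
    and return the updated parameter. Raw samples never leave here."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= lr * grad
    return w

def federated_average(client_weights, client_sizes):
    """Combine client parameters, weighting each by its dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total

# Hypothetical per-hospital datasets, all following y = 2x.
hospitals = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

global_w = 0.0
for _ in range(50):  # communication rounds
    local_weights = [local_train(global_w, data) for data in hospitals]
    global_w = federated_average(local_weights, [len(d) for d in hospitals])

print(round(global_w, 4))  # 2.0
```

Note that shared model updates can still leak information about the training data, so federated learning is often paired with secure aggregation or differential privacy rather than used alone.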

🏦 Finance: AI-Powered Fraud Detection & Compliance

Banks use AI for real-time fraud detection, but they must ensure compliance with GDPR & the EU AI Act by anonymizing transaction data and using explainable AI models.
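
One common building block here is keyed pseudonymization: replacing account identifiers with tokens that stay consistent (so fraud patterns across transactions are preserved) but cannot be reversed without a secret key. The sketch below uses HMAC-SHA256 from the Python standard library; the field names, sample transactions, and key are hypothetical. This is pseudonymization rather than full anonymization, and pseudonymized data is still treated as personal data under GDPR.

```python
import hashlib
import hmac

def pseudonymize(account_id: str, secret_key: bytes) -> str:
    """Map an account ID to a stable token using HMAC-SHA256."""
    return hmac.new(
        secret_key, account_id.encode("utf-8"), hashlib.sha256
    ).hexdigest()

# Hypothetical key; in practice it would be stored in a secrets manager.
SECRET_KEY = b"replace-with-a-randomly-generated-key"

transactions = [
    {"account_id": "ACC-1001", "amount": 250.0},
    {"account_id": "ACC-1001", "amount": 9800.0},
    {"account_id": "ACC-2002", "amount": 40.0},
]

# Replace the raw identifier before the data reaches the fraud model.
model_input = [
    {"account_token": pseudonymize(t["account_id"], SECRET_KEY),
     "amount": t["amount"]}
    for t in transactions
]

# The same account always maps to the same token.
print(model_input[0]["account_token"] == model_input[1]["account_token"])  # True
```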

🛒 Retail & E-commerce: Privacy Risks in AI-Driven Personalization

Retailers use AI for personalized recommendations, but improper data handling can violate CCPA & GDPR. Businesses must ensure user consent mechanisms and data minimization practices.
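
Consent checks and data minimization can be enforced in code before any event reaches a recommendation model. The sketch below assumes a hypothetical consent registry (user ID mapped to the purposes that user opted into) and a hypothetical allow-list of fields; real systems would back both with audited storage.

```python
def events_for_purpose(events, consents, purpose, allowed_fields):
    """Keep only events from users who opted in to `purpose`,
    and strip every field not on the allow-list."""
    kept = []
    for event in events:
        granted = consents.get(event["user_id"], set())
        if purpose in granted:
            kept.append(
                {k: v for k, v in event.items() if k in allowed_fields}
            )
    return kept

# Hypothetical consent registry: u3 never responded, so nothing is granted.
consents = {
    "u1": {"personalization", "analytics"},
    "u2": {"analytics"},
}

events = [
    {"user_id": "u1", "purchase_category": "books", "email": "a@example.com"},
    {"user_id": "u2", "purchase_category": "shoes", "email": "b@example.com"},
    {"user_id": "u3", "purchase_category": "toys", "email": "c@example.com"},
]

training_rows = events_for_purpose(
    events, consents, "personalization", {"user_id", "purchase_category"}
)
print(training_rows)  # [{'user_id': 'u1', 'purchase_category': 'books'}]
```

Treating a missing consent record as "no consent" keeps the default on the safe side, which matches the opt-in approach described earlier.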

🚗 Autonomous Vehicles: AI & Real-Time Data Privacy

Self-driving cars collect vast amounts of location and biometric data. Ensuring privacy-by-design and encryption in AI-driven vehicles is critical to regulatory compliance.

Future Trends in AI Privacy & Data Protection

🔹 AI Regulations Will Continue to Evolve – The EU AI Act will set global precedents for AI privacy, with more countries introducing similar laws.

🔹 Rise of AI Audits & Certification Standards – ISO 42001 and other AI compliance certifications will become standard.

🔹 Greater Use of Privacy-Preserving AI – Federated learning and synthetic data adoption will increase across industries.

🔹 AI Explainability Will Become a Business Imperative – Organizations will be required to provide clearer insights into how AI models process personal data.

Final Thoughts: Building Trust in AI Through Privacy-First Governance

AI-driven innovation doesn’t have to come at the cost of privacy and security. By implementing privacy-by-design principles, adopting privacy-preserving AI techniques, and ensuring compliance with global AI regulations, businesses can build trustworthy AI systems that prioritize both innovation and ethical responsibility.