Learn how Deepeval’s rich test suites and the TRACE framework combine to convert raw fairness, privacy, and robustness metrics into clause‑aligned proof for the EU AI Act, NIST RMF, and ISO 42001.

“63% of stalled AI initiatives cite lack of executive alignment, not model performance, as the primary obstacle.” (MIT Sloan study)

Data‑science teams can generate thousands of metrics, yet regulators and risk committees still ask a simple question:

Where’s the proof?

Open‑source tooling is the fastest way to narrow that metrics‑to‑evidence gap—but only if the metrics arrive wrapped in context, lineage, and immutable audit trails.

⚠️ Why Open‑Source Metrics Alone Fall Short

Open‑source evaluation tools like Deepeval generate powerful metrics—but raw scores aren't enough when compliance is on the line.

📉 Scores Without Context

A 0.93 F1 score means nothing if you can’t trace the dataset, risk tier, or policy threshold it relates to.

🖼️ Screenshots Fade

Static charts and logs can't stand up to regulatory scrutiny years later. Evidence must be replayable and verifiable, even after teams change.

📚 Binders Break Velocity

Manually compiling PDFs and spreadsheets for every audit cycle slows down releases and inflates compliance overhead.

🧭 Regulations Now Require More

Frameworks like the EU AI Act, NIST RMF, and ISO 42001 demand:

- Traceability across datasets, models, and metrics

- Continuous Monitoring of AI behavior and risk

- Accountable Sign‑off with auditable, role-based evidence

→ Open metrics need structured wrappers to become compliance-grade. TRACE delivers that wrapper.

🧪 Deepeval in a Nutshell

🔍 Fairness

- Demographic Parity

- Equalized Odds

- Predictive Parity
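The three criteria above have standard textbook definitions, so it helps to see the arithmetic behind them. The sketch below computes each one as a max-minus-min gap across groups in plain Python. It illustrates the definitions only and is not Deepeval's API. The function names `group_rates` and `fairness_gaps` and the gap convention are illustrative choices.

```python
from typing import Dict, Sequence


def _rate(numer: int, denom: int) -> float:
    """Safe ratio; returns 0.0 when the denominator is zero."""
    return numer / denom if denom else 0.0


def group_rates(y_true: Sequence[int], y_pred: Sequence[int],
                groups: Sequence[str], group: str) -> Dict[str, float]:
    """Selection rate, TPR, FPR and precision (PPV) for a single group."""
    idx = [i for i, g in enumerate(groups) if g == group]
    tp = sum(1 for i in idx if y_pred[i] == 1 and y_true[i] == 1)
    fp = sum(1 for i in idx if y_pred[i] == 1 and y_true[i] == 0)
    fn = sum(1 for i in idx if y_pred[i] == 0 and y_true[i] == 1)
    tn = sum(1 for i in idx if y_pred[i] == 0 and y_true[i] == 0)
    return {
        "selection_rate": _rate(tp + fp, len(idx)),
        "tpr": _rate(tp, tp + fn),
        "fpr": _rate(fp, fp + tn),
        "ppv": _rate(tp, tp + fp),
    }


def fairness_gaps(y_true: Sequence[int], y_pred: Sequence[int],
                  groups: Sequence[str]) -> Dict[str, float]:
    """Max-minus-min gap across groups for each fairness criterion."""
    per_group = [group_rates(y_true, y_pred, groups, g) for g in sorted(set(groups))]

    def gap(key: str) -> float:
        values = [r[key] for r in per_group]
        return max(values) - min(values)

    return {
        # Demographic parity: groups should be selected at equal rates.
        "demographic_parity_diff": gap("selection_rate"),
        # Equalized odds: equal TPR and FPR; report the worse of the two gaps.
        "equalized_odds_diff": max(gap("tpr"), gap("fpr")),
        # Predictive parity: equal precision among positive predictions.
        "predictive_parity_diff": gap("ppv"),
    }
```

With labels `[1,0,1,0,1,0,1,0]`, predictions `[1,0,1,1,1,0,0,0]` and groups `a,a,a,a,b,b,b,b`, group a is selected 75% of the time and group b 25%, giving a demographic parity gap of 0.5. The TPR gap (1.0 vs 0.5) and FPR gap (0.5 vs 0.0) are both 0.5, so the equalized odds gap is also 0.5.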

🛡️ Robustness

- Adversarial Perturbation Checks

- Out-of-Distribution (OOD) Stress Tests
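A perturbation check asks whether small, meaning-preserving edits flip a model's output. The following is a minimal, model-agnostic sketch. It assumes a text classifier exposed as a plain function and uses adjacent-character swaps as the perturbation. Both the perturbation and the names (`swap_adjacent_chars`, `flip_rate`) are illustrative assumptions, and real adversarial suites use much stronger attacks. The fixed seed keeps the run replayable, which matters once results become evidence.

```python
import random
from typing import Callable, Sequence

# Assumption: the model under test is any function from input text to a label.
Model = Callable[[str], str]


def swap_adjacent_chars(text: str, rng: random.Random) -> str:
    """Typo-style perturbation: swap one randomly chosen pair of adjacent characters."""
    if len(text) < 2:
        return text
    i = rng.randrange(len(text) - 1)
    return text[:i] + text[i + 1] + text[i] + text[i + 2:]


def flip_rate(model: Model, inputs: Sequence[str], n_variants: int = 5, seed: int = 0) -> float:
    """Fraction of inputs whose prediction changes under at least one perturbed variant."""
    rng = random.Random(seed)  # fixed seed so the same check can be replayed later
    flipped = 0
    for text in inputs:
        baseline = model(text)
        variants = (swap_adjacent_chars(text, rng) for _ in range(n_variants))
        if any(model(v) != baseline for v in variants):
            flipped += 1
    return flipped / len(inputs) if inputs else 0.0
```

A robust model should keep this rate close to zero; an identity "model" that echoes its input flips on every input with distinct characters, which makes it a handy sanity check for the harness itself.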

🔐 Privacy

- Membership Inference Risk

- Differential Privacy (DP) Budget Tracking
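Differential privacy budget tracking can be as simple as an accountant that refuses any release that would push cumulative spend past a limit. This sketch assumes basic sequential composition, where the ε and δ of successive releases simply add. Tighter accountants (advanced composition, Rényi DP) exist and would allow more releases under the same budget. `PrivacyBudget` is a hypothetical name, not part of Deepeval.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PrivacyBudget:
    """Tracks (epsilon, delta) spend under basic sequential composition."""
    epsilon_limit: float
    delta_limit: float = 0.0
    spent: List[Tuple[str, float, float]] = field(default_factory=list)  # (label, eps, delta)

    @property
    def epsilon_spent(self) -> float:
        return sum(eps for _, eps, _ in self.spent)

    @property
    def delta_spent(self) -> float:
        return sum(d for _, _, d in self.spent)

    def charge(self, label: str, epsilon: float, delta: float = 0.0) -> None:
        """Record a DP release, refusing it if the cumulative budget would be exceeded."""
        if epsilon < 0 or delta < 0:
            raise ValueError("epsilon and delta must be non-negative")
        if (self.epsilon_spent + epsilon > self.epsilon_limit
                or self.delta_spent + delta > self.delta_limit):
            raise RuntimeError(f"privacy budget exceeded by release {label!r}")
        # Only successful charges are logged, so the ledger doubles as audit evidence.
        self.spent.append((label, epsilon, delta))
```

For example, with `epsilon_limit=1.0`, releases costing 0.5 and 0.3 succeed, and a third release costing 0.3 is refused because it would bring the total to 1.1.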

🧩 Extensible by Design

Plug-in architecture enables custom metrics for regulated domains like finance, healthcare, and HR.

📤 Output Format

JSON artifacts + plots = ideal for testing and iteration

🚫 But insufficient for compliance without traceability, policy mapping, and audit sealing.

📘 TRACE: From Numbers to Narrative

TRACE isn’t just a framework—it’s the operational backbone of Responsible AI governance.

It transforms raw metrics into audit-ready artifacts by organizing assurance into five foundational pillars:

Trust • Risk • Action • Compliance • Evidence

Every evaluation run is sealed into a cryptographically signed Evidence Package—creating proof you can replay, not just report.
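The article doesn't specify how TRACE signs its packages, so what follows is only a standard-library sketch of the idea, assuming a shared secret key: serialize the evidence deterministically, hash it, and attach an HMAC-SHA256 tag that fails verification if any field changes afterwards. A production system would more likely use asymmetric signatures (e.g. Ed25519) so auditors can verify without holding the secret. `seal` and `verify` are hypothetical helper names.

```python
import hashlib
import hmac
import json


def canonical_bytes(payload: dict) -> bytes:
    """Deterministic JSON encoding: identical content always yields identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal(payload: dict, key: bytes) -> dict:
    """Wrap a payload with its SHA-256 digest and an HMAC-SHA256 tag."""
    body = canonical_bytes(payload)
    return {
        "payload": payload,
        "sha256": hashlib.sha256(body).hexdigest(),
        "hmac_sha256": hmac.new(key, body, hashlib.sha256).hexdigest(),
    }


def verify(package: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time; any payload edit fails."""
    body = canonical_bytes(package["payload"])
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, package["hmac_sha256"])
```

Sorting keys before hashing is the important design choice: without it, two semantically identical evidence records could serialize differently and fail replay verification.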

🔍 What TRACE Captures

- Purpose & Risk Class – Why the model exists, and how it's risk-ranked

- Lineage Data – Dataset hashes, model version, and environment fingerprint

- Policy Context – Linked thresholds, failure criteria, and regulatory clauses (EU AI Act, NIST RMF, ISO 42001)

- Reviewer Metadata – Identity, role, sign-off time, and notes
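Of these fields, lineage is the most mechanical to capture. A minimal sketch, assuming the dataset is a local file and that interpreter and OS details are an adequate environment fingerprint (a stricter fingerprint would also pin package versions or a container image digest). `file_sha256` and `lineage_record` are hypothetical helpers, not a TRACE API.

```python
import hashlib
import platform
import sys
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large datasets never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def lineage_record(dataset: Path, model_version: str) -> dict:
    """Collect dataset hash, model version, and a coarse environment fingerprint."""
    return {
        "dataset": {"path": str(dataset), "sha256": file_sha256(dataset)},
        "model_version": model_version,
        "environment": {
            "python": sys.version.split()[0],
            "implementation": platform.python_implementation(),
            "os": platform.system(),
            "machine": platform.machine(),
        },
    }
```

Because the hash depends only on file contents, re-running the record against the same dataset months later yields the same digest, which is what lets an auditor confirm that the evaluated data is the data on file.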

🧾 The Output

- ✅ Human-readable Scorecard – Clear risk heatmaps, compliance checklist, TRACE‑RAI readiness status

- ⚙️ Machine-readable Manifest (YAML) – Perfect for CI hooks, vendor submissions, or evidence archives
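TRACE's manifest schema isn't published in this article, so every field name below is hypothetical. The sketch renders the captured context (purpose, risk class, lineage, policy, reviewer) as block-style YAML using only the standard library. It relies on the fact that JSON-encoded scalars are valid YAML 1.2 and assumes keys are plain identifiers. In a real pipeline, PyYAML's `yaml.safe_dump` would replace the hand-rolled emitter.

```python
import json
from typing import Any, Dict


def to_yaml(mapping: Dict[str, Any], indent: int = 0) -> str:
    """Render a nested dict as block-style YAML (minimal emitter, plain keys only)."""
    pad = "  " * indent
    lines = []
    for key, value in mapping.items():
        if isinstance(value, dict) and value:
            # Non-empty mapping: key on its own line, children indented below.
            lines.append(f"{pad}{key}:")
            lines.append(to_yaml(value, indent + 1))
        elif isinstance(value, list) and value and not any(isinstance(v, (dict, list)) for v in value):
            # Non-empty list of scalars: one "- item" line per entry.
            lines.append(f"{pad}{key}:")
            lines.extend(f"{pad}  - {json.dumps(v)}" for v in value)
        else:
            # Scalars, empty containers, and nested lists fall back to JSON,
            # which YAML 1.2 parses as a scalar or flow collection.
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return "\n".join(lines)


# Hypothetical manifest shape mirroring the fields TRACE captures.
EXAMPLE_MANIFEST = {
    "purpose": "consumer credit scoring",
    "risk_class": "high",
    "lineage": {"dataset_sha256": "<sha256 of training data>", "model_version": "credit-v1"},
    "policy": {
        "clauses": ["EU AI Act Article 15"],
        "thresholds": {"demographic_parity_diff": 0.1},
    },
    "reviewer": {"role": "model risk", "signed_off": None},
}
```

`to_yaml(EXAMPLE_MANIFEST)` starts with `purpose: "consumer credit scoring"` and nests `clauses` and `thresholds` under `policy`, a shape that diffs cleanly in version control and is easy for CI hooks to parse.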

✨ Feature Highlights

🗺️ Smart Risk Map

Spot fairness, privacy, robustness, and safety risks in seconds—before they reach production or trigger compliance incidents.

📋 Compliance‑Ready Scorecards

Automatically map your metrics to key regulatory frameworks—EU AI Act, NIST RMF, HITRUST, ISO 42001, and more.

See exactly which clauses are met—and what still needs action.
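Clause mapping boils down to a policy table that ties each metric to a regulatory reference and a threshold. The rules below are an illustrative assumption, not TRACE's actual mapping or legal guidance. The clause labels point at real provisions (EU AI Act Articles 10 and 15, NIST AI RMF MEASURE 2.11), but which metric and threshold count as sufficient evidence is a decision for your compliance team. A missing metric is reported separately from a failing one, since "no evidence" and "needs action" call for different follow-ups.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ClauseRule:
    clause: str       # regulatory reference this rule provides evidence for
    metric: str       # metric name in the evaluation output
    max_value: float  # highest acceptable value (lower is better for these gaps)


# Illustrative mapping only; a real policy set comes from your compliance team.
RULES = [
    ClauseRule("EU AI Act Art. 10 (bias examination)", "demographic_parity_diff", 0.10),
    ClauseRule("EU AI Act Art. 15 (robustness)", "perturbation_flip_rate", 0.05),
    ClauseRule("NIST AI RMF MEASURE 2.11 (fairness)", "equalized_odds_diff", 0.10),
]


def clause_status(metrics: Dict[str, float], rules: Sequence[ClauseRule] = RULES) -> Dict[str, str]:
    """Return 'met', 'needs action', or 'no evidence' for each clause."""
    status = {}
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            status[rule.clause] = "no evidence"
        elif value <= rule.max_value:
            status[rule.clause] = "met"
        else:
            status[rule.clause] = "needs action"
    return status
```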

🎯 Tailored Action Cards

Context-aware next steps, personalized by role:

- Engineers – Targeted fixes with direct links to failed tests

- Risk & Compliance – Suggested controls and risk notes

- Executives – One-click "Ready / Not Ready" summary

🔒 Zero Raw‑Data Touch

TRACE reads metrics only—no training data, no PII, no model weights—making it privacy-safe by design.

🏛️ Trusted Across Regulated Industries

Adopted in finance, healthcare, government, and enterprise tech to cut audit prep time and reduce go-to-market friction.

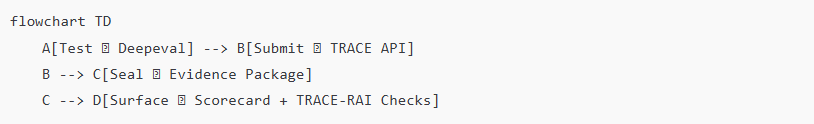

🔄 How Deepeval × TRACE Work Together

- Test – Run Deepeval inside a notebook, CI/CD pipeline, or scheduled job; export fairness, privacy, and robustness metrics as JSON.

- Submit – Send the metrics plus contextual metadata (e.g. model version, dataset hash, use case) to the TRACE Metrics API in roughly 10 lines of code.

- Seal – TRACE signs an immutable Evidence Package, aligns it to regulatory clauses, and computes the TRACE‑RAI Score.

- Surface – Push results to:

  - Pull Requests (as merge-blocking checks)

  - TrustCenter portals (for buyers, auditors, and executives)

  - Vendor assessments (via exportable scorecards)
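The Submit step amounts to packaging metrics with their context and posting them. Because the TRACE Metrics API contract isn't documented here, the endpoint URL, bearer-token header, and payload field names below are placeholders. The sketch only builds the request with Python's standard `urllib.request` and leaves sending to `urllib.request.urlopen(req, timeout=30)`.

```python
import json
import urllib.request

# Placeholder endpoint: the real TRACE Metrics API URL and schema are assumptions.
TRACE_URL = "https://trace.example.com/api/metrics"


def build_submission(metrics: dict, model_version: str, dataset_sha256: str,
                     use_case: str, token: str) -> urllib.request.Request:
    """Bundle evaluation metrics with their context into a JSON POST request."""
    payload = {
        "metrics": metrics,
        "context": {
            "model_version": model_version,
            "dataset_sha256": dataset_sha256,
            "use_case": use_case,
        },
    }
    return urllib.request.Request(
        TRACE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {token}"},  # hypothetical auth scheme
        method="POST",
    )
```

Keeping request construction separate from sending makes the payload easy to unit-test and to archive alongside the evidence it describes.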

🕒 Total cycle time: under 10 minutes.

🧭 Regulatory Alignment at a Glance

| Framework | How Deepeval Helps | How TRACE Completes the Chain |
|---|---|---|
| EU AI Act | Article 15 accuracy & robustness validated via stress tests | Dataset lineage + replay scripts sealed in a cryptographically signed Evidence Package |
| NIST RMF | Metrics support the Measure function | Results linked to Map context (purpose, risk class) and cataloged for Manage, with continuous monitoring |
| ISO 42001 | Clause 9.1 (monitoring, measurement, analysis and evaluation) supported by nightly test runs | Annex A control records generated and surfaced through TRACE Scorecards |

🏦 Credit Model Governance: A Composite Industry Pattern

Challenge — Regulated fintech teams face mounting pressure to deploy credit-scoring models that satisfy EU requirements around fairness, robustness, and transparency—across multiple jurisdictions.

Approach — In one representative case, a fintech compliance team integrated Deepeval into their model retraining cycles, flagging demographic bias and accuracy drift. Outputs were piped to TRACE, tagging dataset lineage, risk tier, and use purpose.

Impact —

- Audit prep time dropped from weeks to days.

- Evidence packages were reused across quarterly reviews, cutting redundancy.

- The team launched on time—without compromising regulatory scope or holding up product delivery.

✅ Adoption Patterns That Stick

- Contract Tests in CI — Block merges automatically when Deepeval results breach TRACE‑RAI thresholds.

- Domain Packs — Finance, healthcare, and HR add‑ons preload context-aware sector checks and regulatory clauses.

- TrustCenter Publishing — Read‑only TRACE Scorecards and Responsible Scores reduce due-diligence email ping-pong.

- TRACE‑RAI Score — A dynamic, role-aware indicator of AI readiness—tailored for engineers, legal, and executive review.

- Drift Watch — Nightly Deepeval probes + weekly TRACE snapshots help flag regressions before they escalate.
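The Contract Tests and Drift Watch patterns both reduce to a gate that compares fresh metrics against thresholds and against the last accepted baseline, then fails the CI job if either check trips. A minimal sketch, assuming metrics arrive as flat JSON objects where lower values are better. The thresholds, drift tolerance, and function names are hypothetical and would in practice come from the TRACE‑RAI policy for the model.

```python
import json
from pathlib import Path
from typing import Dict, List, Optional

# Hypothetical contract: highest acceptable value per metric (lower is better).
THRESHOLDS: Dict[str, float] = {"demographic_parity_diff": 0.10, "perturbation_flip_rate": 0.05}
# Hypothetical drift budget: allowed worsening versus the last accepted baseline.
DRIFT_TOLERANCE = 0.02


def gate(current: Dict[str, float], baseline: Optional[Dict[str, float]] = None) -> List[str]:
    """Return human-readable failures; an empty list means the merge may proceed."""
    failures = []
    for name, limit in THRESHOLDS.items():
        value = current.get(name)
        if value is None:
            failures.append(f"{name}: missing from evaluation output")
        elif value > limit:
            # A hard threshold breach is reported instead of any drift message.
            failures.append(f"{name}={value:.3f} exceeds threshold {limit:.3f}")
        elif baseline and name in baseline and value - baseline[name] > DRIFT_TOLERANCE:
            failures.append(f"{name} drifted from {baseline[name]:.3f} to {value:.3f}")
    return failures


def main(argv: List[str]) -> int:
    """CLI entry point: argv = [current.json, optional baseline.json]; returns an exit code."""
    current = json.loads(Path(argv[0]).read_text())
    baseline = json.loads(Path(argv[1]).read_text()) if len(argv) > 1 else None
    problems = gate(current, baseline)
    for problem in problems:
        print(f"FAIL {problem}")
    return 1 if problems else 0
```

In a CI step, a short script calling `sys.exit(main(sys.argv[1:]))` with `current.json` and an optional `baseline.json` is enough: a non-zero exit code fails the job in most CI systems and can be marked as a required, merge-blocking check.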

🔑 Key Takeaways

- Open‑source metrics gain enterprise gravity when wrapped in traceable, replayable evidence.

- Deepeval × TRACE: roughly 10 lines of code can save weeks of audit preparation, with no infrastructure overhaul needed.

- Compliance isn’t about scores—it’s about proof. TRACE seals metrics into clause‑aligned, audit‑ready packages.

- The TRACE‑RAI Score distills technical results into a single readiness view—engineers see failing tests, risk teams get control prompts, execs get a go/no‑go.

- Early adopters report up to 60% faster audits, reduced vendor friction, and fewer delayed model launches.